R package for automation of machine learning, forecasting, feature engineering, model evaluation, model interpretation, recommenders, and EDA.

AutoQuant Reference Manual


Documentation + Code Examples

Background


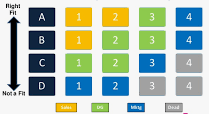

Automated Machine Learning - In my view, AutoML should consist of functions that make professional model development and operationalization more efficient. The functions in this package are designed to help no matter which part of the ML lifecycle you are working on. They have been tested across a variety of industries and, in my experience, have consistently outperformed competing methods.

Package Details

Supervised Learning - Currently, I’m utilizing CatBoost, LightGBM, XGBoost, and H2O for all of the automated machine learning functions. GPUs can be utilized with CatBoost, LightGBM, and XGBoost, while those and the H2O models can all utilize 100% of CPU. Multi-armed bandit grid tuning is available for CatBoost, LightGBM, and XGBoost models; it uses the concept of randomized probability matching, which is detailed in the R package “bandit”. My choice of ML algorithms is based on their previous success against other algorithms on real-world use cases, the additional utilities these packages offer beyond accurate predictions, their ability to work on big data, and the fact that they’re available in both R and Python, which makes managing multiple languages a little more seamless in a professional setting.
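To make the idea of randomized probability matching concrete, here is a minimal base-R sketch of Thompson sampling over three candidate hyperparameter configurations. It is not AutoQuant's tuning code: the configuration names, the win probabilities, and the "beat the baseline" success definition are all assumptions made up for illustration.

```r
# Randomized probability matching (Thompson sampling) over a set of
# candidate hyperparameter configurations ("arms"). Illustrative only.
set.seed(42)

# Hypothetical probability that each configuration beats a baseline model
true_win_prob <- c(grid_1 = 0.20, grid_2 = 0.50, grid_3 = 0.80)

n_arms <- length(true_win_prob)
wins   <- rep(0, n_arms)  # trials where the arm beat the baseline
losses <- rep(0, n_arms)  # trials where it did not

for (trial in seq_len(300)) {
  # Draw one sample from each arm's Beta posterior (uniform Beta(1, 1) prior)
  draws <- rbeta(n_arms, shape1 = wins + 1, shape2 = losses + 1)
  # Play the arm whose sampled win rate is highest
  arm <- which.max(draws)
  # Simulate a model build: did this configuration beat the baseline?
  success <- runif(1) < true_win_prob[[arm]]
  if (success) wins[arm] <- wins[arm] + 1 else losses[arm] <- losses[arm] + 1
}

# Number of times each configuration was tried
pulls <- wins + losses
names(pulls) <- names(true_win_prob)
print(pulls)
```

Each arm is chosen with a probability that matches the current belief that it is the best one, so stronger configurations tend to receive more of the tuning budget while weaker ones are still tried occasionally.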

Documentation - Each exported function in the package has a help file that can be viewed in your RStudio session, e.g. ?Rodeo::ModelDataPrep. Many of them come with examples coded up in the help files (at the bottom) that you can run to get a feel for how to set the parameters. There’s also a listing of exported functions by category with code examples at the bottom of this readme. You can also jump into the R folder here to dig into the source code.

Overall process: Typically, I go to the warehouse to get all of my base features and then I run through all the relevant feature engineering functions in this package. Personally, I set up templates for feature engineering, model training optimization, and model scoring (including feature engineering for scoring). I collect all relevant metadata in a list that is shared across templates and, as a result, I never have to touch the model scoring template, which makes operationalization and maintenance a breeze. I can simply list out the columns of interest and the feature engineering functions I want to utilize, then kick off some command line scripts, and everything else is managed automatically.
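The shared-metadata pattern described above can be sketched in base R roughly as follows. Every name here (ArgsList, ApplyFeatureSteps, the columns, and the lm() stand-in model) is a hypothetical placeholder and not part of AutoQuant; the point is only that the training and scoring templates read the same list.

```r
# One metadata list shared by the training and scoring templates.
# All names are hypothetical placeholders, not AutoQuant objects.
ArgsList <- list(
  TargetColumnName = "Sales",
  FeatureColNames  = c("Price", "Promo"),
  # Feature engineering steps stored as functions, so both templates
  # apply exactly the same logic in the same order
  FeatureSteps = list(
    LogPrice  = function(d) { d$LogPrice <- log(d$Price); d },
    PromoFlag = function(d) { d$PromoFlag <- as.integer(d$Promo > 0); d }
  )
)

# Shared helper: run every registered step against a data set
ApplyFeatureSteps <- function(d, Args) {
  for (step in Args$FeatureSteps) d <- step(d)
  d
}

# Training template (lm() stands in for any model)
train <- data.frame(
  Sales = c(10, 12, 9, 14, 8),
  Price = c(2.0, 1.5, 2.5, 1.2, 3.0),
  Promo = c(0, 1, 0, 2, 0))
train_fe <- ApplyFeatureSteps(train, ArgsList)
fit <- lm(Sales ~ LogPrice + PromoFlag, data = train_fe)

# Scoring template: only the incoming data changes
new_data <- data.frame(Price = c(1.8, 2.2), Promo = c(1, 0))
score_fe <- ApplyFeatureSteps(new_data, ArgsList)
score_fe$Predicted <- predict(fit, newdata = score_fe)
print(score_fe)
```

Adding or removing a feature engineering step then means editing the list once, with no change to the scoring code.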

Installation

The DESCRIPTION file is designed to require only the minimum number of packages to install AutoQuant. However, in order to utilize most of the functions in the package, you’ll have to install additional libraries. I set it up this way on purpose: you don’t need to install every possible dependency if you are only interested in using a few of the functions. For example, if you only want to use CatBoost, then install the catboost package and forget about the h2o, xgboost, and lightgbm packages. This is one of the primary benefits of not hosting an R package on CRAN, which requires dependencies to be listed in the Imports section of the DESCRIPTION file, which in turn requires users to have all dependencies installed in order to install the package.

The minimal set of packages that needs to be installed is listed below. The full list can be found in the Additional Packages to Install section.

- bit64

- data.table

- doParallel

- foreach

- lubridate

- timeDate

# Core packages

if(!("data.table" %in% rownames(installed.packages()))) install.packages("data.table"); print("data.table")

if(!("collapse" %in% rownames(installed.packages()))) install.packages("collapse"); print("collapse")

if(!("bit64" %in% rownames(installed.packages()))) install.packages("bit64"); print("bit64")

if(!("devtools" %in% rownames(installed.packages()))) install.packages("devtools"); print("devtools")

if(!("doParallel" %in% rownames(installed.packages()))) install.packages("doParallel"); print("doParallel")

if(!("foreach" %in% rownames(installed.packages()))) install.packages("foreach"); print("foreach")

if(!("lubridate" %in% rownames(installed.packages()))) install.packages("lubridate"); print("lubridate")

if(!("timeDate" %in% rownames(installed.packages()))) install.packages("timeDate"); print("timeDate")

# AutoQuant

devtools::install_github('AdrianAntico/AutoQuant', upgrade = FALSE, dependencies = FALSE, force = TRUE)
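The repeated check-then-install lines above can also be written as a loop over a vector of package names. The sketch below is one way to do that in base R; missing_packages is a hypothetical helper name, and the install call is left commented out so that running the snippet has no side effects.

```r
# Hypothetical helper: which of the requested packages are not installed?
missing_packages <- function(pkgs, installed = rownames(installed.packages())) {
  setdiff(pkgs, installed)
}

# Same core packages as in the block above
core_pkgs <- c("data.table", "collapse", "bit64", "devtools",
               "doParallel", "foreach", "lubridate", "timeDate")

to_install <- missing_packages(core_pkgs)
if (length(to_install) > 0) {
  message("Missing packages: ", paste(to_install, collapse = ", "))
  # install.packages(to_install)  # uncomment to actually install
} else {
  message("All core packages are already installed")
}
```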

Additional Packages to Install

Install ALL R package dependencies for all functions:

XGBoost and LightGBM can be used with GPU. However, their installation is much more involved than CatBoost’s, which comes with GPU capabilities simply by installing the package. The installation instructions below are for the CPU versions only. Refer to each package’s home page for GPU installation instructions.

# Install Dependencies----

if(!("devtools" %in% rownames(installed.packages()))) install.packages("devtools"); print("devtools")

# Core packages

if(!("data.table" %in% rownames(installed.packages()))) install.packages("data.table"); print("data.table")

if(!("collapse" %in% rownames(installed.packages()))) install.packages("collapse"); print("collapse")

if(!("bit64" %in% rownames(installed.packages()))) install.packages("bit64"); print("bit64")


if(!("doParallel" %in% rownames(installed.packages()))) install.packages("doParallel"); print("doParallel")

if(!("foreach" %in% rownames(installed.packages()))) install.packages("foreach"); print("foreach")

if(!("lubridate" %in% rownames(installed.packages()))) install.packages("lubridate"); print("lubridate")

if(!("timeDate" %in% rownames(installed.packages()))) install.packages("timeDate"); print("timeDate")

# Additional dependencies for specific use cases

if(!("combinat" %in% rownames(installed.packages()))) install.packages("combinat"); print("combinat")

if(!("DBI" %in% rownames(installed.packages()))) install.packages("DBI"); print("DBI")

if(!("e1071" %in% rownames(installed.packages()))) install.packages("e1071"); print("e1071")

if(!("fBasics" %in% rownames(installed.packages()))) install.packages("fBasics"); print("fBasics")

if(!("forecast" %in% rownames(installed.packages()))) install.packages("forecast"); print("forecast")

if(!("fpp" %in% rownames(installed.packages()))) install.packages("fpp"); print("fpp")

if(!("ggplot2" %in% rownames(installed.packages()))) install.packages("ggplot2"); print("ggplot2")

if(!("gridExtra" %in% rownames(installed.packages()))) install.packages("gridExtra"); print("gridExtra")

if(!("itertools" %in% rownames(installed.packages()))) install.packages("itertools"); print("itertools")

if(!("MLmetrics" %in% rownames(installed.packages()))) install.packages("MLmetrics"); print("MLmetrics")

if(!("nortest" %in% rownames(installed.packages()))) install.packages("nortest"); print("nortest")

if(!("pROC" %in% rownames(installed.packages()))) install.packages("pROC"); print("pROC")

if(!("RColorBrewer" %in% rownames(installed.packages()))) install.packages("RColorBrewer"); print("RColorBrewer")

if(!("recommenderlab" %in% rownames(installed.packages()))) install.packages("recommenderlab"); print("recommenderlab")

if(!("RPostgres" %in% rownames(installed.packages()))) install.packages("RPostgres"); print("RPostgres")

if(!("Rfast" %in% rownames(installed.packages()))) install.packages("Rfast"); print("Rfast")

if(!("scatterplot3d" %in% rownames(installed.packages()))) install.packages("scatterplot3d"); print("scatterplot3d")

if(!("stringr" %in% rownames(installed.packages()))) install.packages("stringr"); print("stringr")

if(!("tsoutliers" %in% rownames(installed.packages()))) install.packages("tsoutliers"); print("tsoutliers")

if(!("xgboost" %in% rownames(installed.packages()))) install.packages("xgboost"); print("xgboost")

if(!("lightgbm" %in% rownames(installed.packages()))) install.packages("lightgbm"); print("lightgbm")

if(!("regmedint" %in% rownames(installed.packages()))) install.packages("regmedint"); print("regmedint")

for(pkg in c("RCurl","jsonlite")) if (! (pkg %in% rownames(installed.packages()))) { install.packages(pkg) }

install.packages("h2o", type = "source", repos = (c("http://h2o-release.s3.amazonaws.com/h2o/latest_stable_R")))

devtools::install_github('catboost/catboost', subdir = 'catboost/R-package')

# Dependencies for ML Reports

if(!("reactable" %in% rownames(installed.packages()))) install.packages("reactable"); print("reactable")

devtools::install_github('AdrianAntico/prettydoc', upgrade = FALSE, dependencies = FALSE, force = TRUE)

# And lastly, AutoQuant

devtools::install_github('AdrianAntico/AutoQuant', upgrade = FALSE, dependencies = FALSE, force = TRUE)

Installation Troubleshooting

The most common issue users run into when installing AutoQuant is the installation of the catboost package dependency. Since catboost is not on CRAN, it can only be installed through GitHub. To install catboost without error (and consequently install AutoQuant without error), try running the options() line below first, then restart your R session, then re-run the 2-step installation process above.

If you’re still having trouble, submit an issue and I’ll work with you to get it installed.

# Method for on-premise servers

options(devtools.install.args = c("--no-multiarch", "--no-test-load"))

install.packages("https://github.com/catboost/catboost/releases/download/<version>/catboost-R-Windows-<version>.tgz", repos = NULL, type = "source", INSTALL_opts = c("--no-multiarch", "--no-test-load"))

# Method for Azure Machine Learning Designer pipelines

## catboost

install.packages("https://github.com/catboost/catboost/releases/download/<version>/catboost-R-Windows-<version>.tgz", repos = NULL, type = "source", INSTALL_opts = c("--no-multiarch", "--no-test-load"))

## AutoQuant

install.packages("https://github.com/AdrianAntico/AutoQuant/archive/refs/tags/<version>.tar.gz", repos = NULL, type = "source", INSTALL_opts = c("--no-multiarch", "--no-test-load"))

Usage

Supervised Learning


Regression


The Auto_Regression() models handle a multitude of tasks. In order:Regression Description

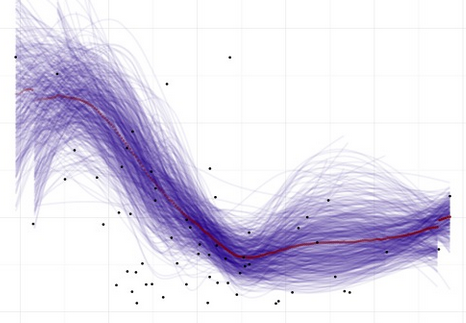

AutoTransformationCreate() functionAutoDataPartition() function, if you didn’t supply those directly to the functionAutoXGBoostRegression()) and save the factor levels for scoring in a way that guarentees consistency across training, validation, and test data sets, utilizing the DummifyDT() functionEvalPlot() functionParDepPlots() function
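The factor-level step above is the one that most often breaks scoring pipelines, so here is a minimal base-R sketch of the idea: learn the levels once on training data, save them, and encode every other data set against the saved levels. This is not how DummifyDT() is implemented; the helper name one_hot, the column name, and the choice to encode unseen levels as all zeros are assumptions for illustration.

```r
# Learn factor levels on training data, then reuse them everywhere.
train <- data.frame(Color = c("red", "blue", "green", "blue"))
test  <- data.frame(Color = c("green", "purple", "red"))  # "purple" is unseen

# Saved once at training time; this is the metadata to persist for scoring
saved_levels <- sort(unique(train$Color))

# Hypothetical helper: one indicator column per saved level
one_hot <- function(x, levels, prefix = "Color_") {
  # Re-level against the saved levels; unseen values become NA
  f <- factor(x, levels = levels)
  cols <- vapply(
    levels,
    function(l) as.integer(!is.na(f) & f == l),
    integer(length(x)))
  # Force a matrix so the column layout is fixed even for a single row
  out <- matrix(cols, nrow = length(x))
  colnames(out) <- paste0(prefix, levels)
  out
}

train_x <- one_hot(train$Color, saved_levels)
test_x  <- one_hot(test$Color, saved_levels)

print(train_x)
print(test_x)  # the "purple" row is all zeros
```

Because both matrices are built from saved_levels, they always have the same columns in the same order, regardless of which values happen to appear in a given data set.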

CatBoost Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 10000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- AutoQuant::AutoCatBoostRegression(

# GPU or CPU and the number of available GPUs

TrainOnFull = FALSE,

task_type = 'GPU',

NumGPUs = 1,

DebugMode = FALSE,

# Metadata args

OutputSelection = c('Importances', 'EvalPlots', 'EvalMetrics', 'Score_TrainData'),

ModelID = 'Test_Model_1',

model_path = normalizePath('./'),

metadata_path = normalizePath('./'),

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

ReturnModelObjects = TRUE,

# Data args

data = data,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = 'Adrian',

FeatureColNames = names(data)[!names(data) %in%

c('IDcol_1', 'IDcol_2','Adrian')],

PrimaryDateColumn = NULL,

WeightsColumnName = NULL,

IDcols = c('IDcol_1','IDcol_2'),

TransformNumericColumns = 'Adrian',

Methods = c('BoxCox', 'Asinh', 'Asin', 'Log',

'LogPlus1', 'Sqrt', 'Logit'),

# Model evaluation

eval_metric = 'RMSE',

eval_metric_value = 1.5,

loss_function = 'RMSE',

loss_function_value = 1.5,

MetricPeriods = 10L,

NumOfParDepPlots = ncol(data)-1L-2L,

# Grid tuning args

PassInGrid = NULL,

GridTune = FALSE,

MaxModelsInGrid = 30L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 60*60,

BaselineComparison = 'default',

# ML args

langevin = FALSE,

diffusion_temperature = 10000,

Trees = 1000,

Depth = 9,

L2_Leaf_Reg = NULL,

RandomStrength = 1,

BorderCount = 128,

LearningRate = NULL,

RSM = 1,

BootStrapType = NULL,

GrowPolicy = 'SymmetricTree',

model_size_reg = 0.5,

feature_border_type = 'GreedyLogSum',

sampling_unit = 'Object',

subsample = NULL,

score_function = 'Cosine',

min_data_in_leaf = 1)

XGBoost Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- AutoQuant::AutoXGBoostRegression(

# GPU or CPU

TreeMethod = 'hist',

NThreads = parallel::detectCores(),

LossFunction = 'reg:squarederror',

# Metadata args

OutputSelection = c('Importances', 'EvalPlots', 'EvalMetrics', 'Score_TrainData'),

model_path = normalizePath("./"),

metadata_path = NULL,

ModelID = "Test_Model_1",

EncodingMethod = "binary",

ReturnFactorLevels = TRUE,

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

DebugMode = FALSE,

# Data args

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c('IDcol_1','IDcol_2','Adrian')],

PrimaryDateColumn = NULL,

WeightsColumnName = NULL,

IDcols = c('IDcol_1','IDcol_2'),

TransformNumericColumns = 'Adrian',

Methods = c('Asinh','Asin','Log','LogPlus1','Sqrt','Logit'),

# Model evaluation args

eval_metric = 'rmse',

NumOfParDepPlots = 3L,

# Grid tuning args

PassInGrid = NULL,

GridTune = FALSE,

grid_eval_metric = 'r2',

BaselineComparison = 'default',

MaxModelsInGrid = 10L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

Verbose = 1L,

# ML args

Trees = 50L,

eta = 0.05,

max_depth = 4L,

min_child_weight = 1.0,

subsample = 0.55,

colsample_bytree = 0.55)
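The example above sets eval_metric = 'rmse' and grid_eval_metric = 'r2'. For reference, here is a small base-R sketch of both metrics using their textbook definitions. AutoQuant may compute them differently internally (for instance, some tools report r2 as a squared correlation), so treat this as an aid for checking returned predictions by hand, not as the package's code.

```r
# Root mean squared error
rmse <- function(actual, predicted) {
  sqrt(mean((actual - predicted)^2))
}

# Coefficient of determination: 1 - SS_residual / SS_total
r2 <- function(actual, predicted) {
  ss_res <- sum((actual - predicted)^2)
  ss_tot <- sum((actual - mean(actual))^2)
  1 - ss_res / ss_tot
}

actual    <- c(3, 5, 7, 9)
predicted <- c(2, 5, 8, 9)

rmse(actual, predicted)  # sqrt((1 + 0 + 1 + 0) / 4) = sqrt(0.5)
r2(actual, predicted)    # 1 - 2 / 20 = 0.9
```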

LightGBM Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- AutoQuant::AutoLightGBMRegression(

# Metadata args

OutputSelection = c('Importances','EvalPlots','EvalMetrics','Score_TrainData'),

model_path = normalizePath('./'),

metadata_path = NULL,

ModelID = 'Test_Model_1',

NumOfParDepPlots = 3L,

EncodingMethod = 'credibility',

ReturnFactorLevels = TRUE,

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

DebugMode = FALSE,

# Data args

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = 'Adrian',

FeatureColNames = names(data)[!names(data) %in% c('IDcol_1', 'IDcol_2','Adrian')],

PrimaryDateColumn = NULL,

WeightsColumnName = NULL,

IDcols = c('IDcol_1','IDcol_2'),

TransformNumericColumns = NULL,

Methods = c('Asinh','Asin','Log','LogPlus1','Sqrt','Logit'),

# Grid parameters

GridTune = FALSE,

grid_eval_metric = 'r2',

BaselineComparison = 'default',

MaxModelsInGrid = 10L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

PassInGrid = NULL,

# Core parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#core-parameters

input_model = NULL, # continue training a model that is stored to file

task = 'train',

device_type = 'CPU',

NThreads = parallel::detectCores() / 2,

objective = 'regression',

metric = 'rmse',

boosting = 'gbdt',

LinearTree = FALSE,

Trees = 50L,

eta = NULL,

num_leaves = 31,

deterministic = TRUE,

# Learning Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#learning-control-parameters

force_col_wise = FALSE,

force_row_wise = FALSE,

max_depth = NULL,

min_data_in_leaf = 20,

min_sum_hessian_in_leaf = 0.001,

bagging_freq = 0,

bagging_fraction = 1.0,

feature_fraction = 1.0,

feature_fraction_bynode = 1.0,

extra_trees = FALSE,

early_stopping_round = 10,

first_metric_only = TRUE,

max_delta_step = 0.0,

lambda_l1 = 0.0,

lambda_l2 = 0.0,

linear_lambda = 0.0,

min_gain_to_split = 0,

drop_rate_dart = 0.10,

max_drop_dart = 50,

skip_drop_dart = 0.50,

uniform_drop_dart = FALSE,

top_rate_goss = FALSE,

other_rate_goss = FALSE,

monotone_constraints = NULL,

monotone_constraints_method = 'advanced',

monotone_penalty = 0.0,

forcedsplits_filename = NULL, # use for AutoStack option; .json file

refit_decay_rate = 0.90,

path_smooth = 0.0,

# IO Dataset Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#io-parameters

max_bin = 255,

min_data_in_bin = 3,

data_random_seed = 1,

is_enable_sparse = TRUE,

enable_bundle = TRUE,

use_missing = TRUE,

zero_as_missing = FALSE,

two_round = FALSE,

# Convert Parameters

convert_model = NULL,

convert_model_language = 'cpp',

# Objective Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#objective-parameters

boost_from_average = TRUE,

alpha = 0.90,

fair_c = 1.0,

poisson_max_delta_step = 0.70,

tweedie_variance_power = 1.5,

lambdarank_truncation_level = 30,

# Metric Parameters (metric is in Core)

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#metric-parameters

is_provide_training_metric = TRUE,

eval_at = c(1,2,3,4,5),

# Network Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#network-parameters

num_machines = 1,

# GPU Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#gpu-parameters

gpu_platform_id = -1,

gpu_device_id = -1,

gpu_use_dp = TRUE,

num_gpu = 1)

H2O-GBM Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- AutoQuant::AutoH2oGBMRegression(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

H2OShutdown = TRUE,

H2OStartUp = TRUE,

IfSaveModel = "mojo",

# Model evaluation

NumOfParDepPlots = 3,

# Metadata arguments:

model_path = normalizePath("./"),

metadata_path = file.path(normalizePath("./")),

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data arguments

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = 'Adrian',

FeatureColNames = names(data)[!names(data) %in% c('IDcol_1','IDcol_2','Adrian')],

WeightsColumn = NULL,

TransformNumericColumns = NULL,

Methods = c('Asinh','Asin','Log','LogPlus1','Sqrt','Logit'),

# ML grid tuning args

GridTune = FALSE,

GridStrategy = "Cartesian",

MaxRuntimeSecs = 60*60*24,

StoppingRounds = 10,

MaxModelsInGrid = 2,

# Model args

Trees = 50,

LearnRate = 0.10,

LearnRateAnnealing = 1,

eval_metric = "RMSE",

Alpha = NULL,

Distribution = "poisson",

MaxDepth = 20,

SampleRate = 0.632,

ColSampleRate = 1,

ColSampleRatePerTree = 1,

ColSampleRatePerTreeLevel = 1,

MinRows = 1,

NBins = 20,

NBinsCats = 1024,

NBinsTopLevel = 1024,

HistogramType = "AUTO",

CategoricalEncoding = "AUTO")
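The MaxMem expression in the H2O examples shells out to awk against /proc/meminfo, which exists only on Linux. The sketch below performs the same arithmetic (free kB divided by 1,000,000, floored, with a "G" suffix) in plain R and falls back to a fixed value when the file is missing or the result is below 1 GB. The helper name h2o_mem_string and the "4G" fallback are assumptions, not part of AutoQuant or H2O.

```r
# Hypothetical helper: build a memory string such as "12G" from the
# contents of /proc/meminfo, with a fallback for non-Linux systems.
h2o_mem_string <- function(meminfo_lines, fallback = "4G") {
  line <- grep("^MemFree:", meminfo_lines, value = TRUE)
  if (length(line) == 0) return(fallback)
  # Keep only the digits, e.g. "MemFree:  16384000 kB" -> 16384000
  kb <- as.numeric(gsub("[^0-9]", "", line[1]))
  gb <- floor(kb / 1000000)
  if (is.na(gb) || gb < 1) return(fallback)
  paste0(gb, "G")
}

meminfo <- if (file.exists("/proc/meminfo")) readLines("/proc/meminfo") else character(0)
MaxMem <- h2o_mem_string(meminfo)
print(MaxMem)
```

The resulting string can be passed as the MaxMem argument in place of the inline expression.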

H2O-DRF Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- AutoQuant::AutoH2oDRFRegression(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1L, parallel::detectCores() - 2L),

H2OShutdown = TRUE,

H2OStartUp = TRUE,

IfSaveModel = "mojo",

# Model evaluation:

eval_metric = "RMSE",

NumOfParDepPlots = 3,

# Metadata arguments:

model_path = normalizePath("./"),

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data Args

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

WeightsColumn = NULL,

TransformNumericColumns = NULL,

Methods = c("Asinh", "Asin", "Log", "LogPlus1", "Sqrt", "Logit"),

# Grid Tuning Args

GridStrategy = "Cartesian",

GridTune = FALSE,

MaxModelsInGrid = 10,

MaxRuntimeSecs = 60*60*24,

StoppingRounds = 10,

# ML Args

Trees = 50,

MaxDepth = 20,

SampleRate = 0.632,

MTries = -1,

ColSampleRatePerTree = 1,

ColSampleRatePerTreeLevel = 1,

MinRows = 1,

NBins = 20,

NBinsCats = 1024,

NBinsTopLevel = 1024,

HistogramType = "AUTO",

CategoricalEncoding = "AUTO")

H2O-GLM Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- AutoQuant::AutoH2oGLMRegression(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

H2OShutdown = TRUE,

H2OStartUp = TRUE,

IfSaveModel = "mojo",

# Model evaluation:

eval_metric = "RMSE",

NumOfParDepPlots = 3,

# Metadata arguments:

model_path = NULL,

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data arguments:

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

RandomColNumbers = NULL,

InteractionColNumbers = NULL,

WeightsColumn = NULL,

TransformNumericColumns = NULL,

Methods = c("Asinh", "Asin", "Log", "LogPlus1", "Sqrt", "Logit"),

# Model args

GridTune = FALSE,

GridStrategy = "Cartesian",

StoppingRounds = 10,

MaxRunTimeSecs = 3600 * 24 * 7,

MaxModelsInGrid = 10,

Distribution = "gaussian",

Link = "identity",

TweedieLinkPower = NULL,

TweedieVariancePower = NULL,

RandomDistribution = NULL,

RandomLink = NULL,

Solver = "AUTO",

Alpha = NULL,

Lambda = NULL,

LambdaSearch = FALSE,

NLambdas = -1,

Standardize = TRUE,

RemoveCollinearColumns = FALSE,

InterceptInclude = TRUE,

NonNegativeCoefficients = FALSE)

H2O-AutoML Example

# Create some dummy correlated data with numeric and categorical features

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Run function

TestModel <- AutoQuant::AutoH2oMLRegression(

# Compute management

MaxMem = "32G",

NThreads = max(1, parallel::detectCores()-2),

H2OShutdown = TRUE,

IfSaveModel = "mojo",

# Model evaluation

eval_metric = "RMSE",

NumOfParDepPlots = 3,

# Metadata arguments

model_path = NULL,

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

# Data arguments

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

TransformNumericColumns = NULL,

Methods = c("Asinh", "Asin", "Log", "LogPlus1", "Logit"),

# Model args

GridTune = FALSE,

ExcludeAlgos = NULL,

Trees = 50,

MaxModelsInGrid = 10)

H2O-GAM Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Define GAM Columns to use - up to 9 are allowed

GamCols <- names(which(unlist(lapply(data, is.numeric))))

GamCols <- GamCols[!GamCols %in% c("Adrian","IDcol_1","IDcol_2")]

GamCols <- GamCols[1L:(min(9L,length(GamCols)))]

# Run function

TestModel <- AutoQuant::AutoH2oGAMRegression(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

H2OShutdown = TRUE,

H2OStartUp = TRUE,

IfSaveModel = "mojo",

# Model evaluation:

eval_metric = "RMSE",

NumOfParDepPlots = 3,

# Metadata arguments:

model_path = NULL,

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data arguments:

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

InteractionColNumbers = NULL,

WeightsColumn = NULL,

GamColNames = GamCols,

TransformNumericColumns = NULL,

Methods = c("Asinh", "Asin", "Log", "LogPlus1", "Sqrt", "Logit"),

# Model args

num_knots = NULL,

keep_gam_cols = TRUE,

GridTune = FALSE,

GridStrategy = "Cartesian",

StoppingRounds = 10,

MaxRunTimeSecs = 3600 * 24 * 7,

MaxModelsInGrid = 10,

Distribution = "gaussian",

Link = "Family_Default",

TweedieLinkPower = NULL,

TweedieVariancePower = NULL,

Solver = "AUTO",

Alpha = NULL,

Lambda = NULL,

LambdaSearch = FALSE,

NLambdas = -1,

Standardize = TRUE,

RemoveCollinearColumns = FALSE,

InterceptInclude = TRUE,

NonNegativeCoefficients = FALSE)

Binary Classification


The Auto_Classifier() models handle a multitude of tasks.

CatBoost Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 10000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = TRUE,

MultiClass = FALSE)

# Run function

TestModel <- AutoQuant::AutoCatBoostClassifier(

# GPU or CPU and the number of available GPUs

task_type = 'GPU',

NumGPUs = 1,

TrainOnFull = FALSE,

DebugMode = FALSE,

# Metadata args

OutputSelection = c('Score_TrainData', 'Importance', 'EvalPlots', 'Metrics', 'PDF'),

ModelID = 'Test_Model_1',

model_path = normalizePath('./'),

metadata_path = normalizePath('./'),

SaveModelObjects = FALSE,

ReturnModelObjects = TRUE,

SaveInfoToPDF = FALSE,

# Data args

data = data,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = 'Adrian',

FeatureColNames = names(data)[!names(data) %in%

c('IDcol_1','IDcol_2','Adrian')],

PrimaryDateColumn = NULL,

WeightsColumnName = NULL,

IDcols = c('IDcol_1','IDcol_2'),

# Evaluation args

ClassWeights = c(1L,1L),

CostMatrixWeights = c(1,0,0,1),

EvalMetric = 'AUC',

grid_eval_metric = 'MCC',

LossFunction = 'Logloss',

MetricPeriods = 10L,

NumOfParDepPlots = ncol(data)-1L-2L,

# Grid tuning args

PassInGrid = NULL,

GridTune = FALSE,

MaxModelsInGrid = 30L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

BaselineComparison = 'default',

# ML args

Trees = 1000,

Depth = 9,

LearningRate = NULL,

L2_Leaf_Reg = NULL,

model_size_reg = 0.5,

langevin = FALSE,

diffusion_temperature = 10000,

RandomStrength = 1,

BorderCount = 128,

RSM = 1,

BootStrapType = 'Bayesian',

GrowPolicy = 'SymmetricTree',

feature_border_type = 'GreedyLogSum',

sampling_unit = 'Object',

subsample = NULL,

score_function = 'Cosine',

min_data_in_leaf = 1)
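The classifier example above passes CostMatrixWeights = c(1,0,0,1) and tunes on grid_eval_metric = 'MCC'. The base-R sketch below shows how such quantities can be derived from a confusion matrix at a single threshold. The MCC formula is the textbook one. The utility function is only an illustration, and it assumes the four weights are ordered (true positive, false negative, false positive, true negative); check the function's help file for the ordering AutoQuant actually uses.

```r
# Confusion-matrix counts at a given probability threshold
confusion_counts <- function(actual, prob, threshold = 0.5) {
  pred <- as.integer(prob >= threshold)
  out <- c(TP = sum(pred == 1 & actual == 1),
           FN = sum(pred == 0 & actual == 1),
           FP = sum(pred == 1 & actual == 0),
           TN = sum(pred == 0 & actual == 0))
  storage.mode(out) <- "double"  # avoid integer overflow in products
  out
}

# Matthews correlation coefficient (textbook definition)
mcc <- function(cc) {
  num <- cc[["TP"]] * cc[["TN"]] - cc[["FP"]] * cc[["FN"]]
  den <- sqrt((cc[["TP"]] + cc[["FP"]]) * (cc[["TP"]] + cc[["FN"]]) *
              (cc[["TN"]] + cc[["FP"]]) * (cc[["TN"]] + cc[["FN"]]))
  if (den == 0) 0 else num / den
}

# Weighted utility per observation.
# ASSUMPTION: weights are ordered (TP, FN, FP, TN).
utility <- function(cc, weights = c(1, 0, 0, 1)) {
  sum(weights * cc[c("TP", "FN", "FP", "TN")]) / sum(cc)
}

actual <- c(1, 1, 1, 0, 0, 0, 0, 1)
prob   <- c(0.9, 0.8, 0.3, 0.2, 0.6, 0.1, 0.4, 0.7)

cc <- confusion_counts(actual, prob)
print(cc)       # TP = 3, FN = 1, FP = 1, TN = 3
mcc(cc)         # (9 - 1) / sqrt(4 * 4 * 4 * 4) = 0.5
utility(cc)     # (3 + 3) / 8 = 0.75
```

With the default weights c(1, 0, 0, 1), the utility in this sketch reduces to plain accuracy; other weights let correct and incorrect predictions count differently.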

XGBoost Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = TRUE,

MultiClass = FALSE)

# Run function

TestModel <- AutoQuant::AutoXGBoostClassifier(

# GPU or CPU

TreeMethod = "hist",

NThreads = parallel::detectCores(),

# Metadata args

OutputSelection = c("Importances", "EvalPlots", "EvalMetrics", "PDFs", "Score_TrainData"),

model_path = normalizePath("./"),

metadata_path = NULL,

ModelID = "Test_Model_1",

EncodingMethod = "binary",

ReturnFactorLevels = TRUE,

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data args

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in%

c("IDcol_1", "IDcol_2","Adrian")],

WeightsColumnName = NULL,

IDcols = c("IDcol_1","IDcol_2"),

# Model evaluation

LossFunction = 'reg:logistic',

CostMatrixWeights = c(1,0,0,1),

eval_metric = "auc",

grid_eval_metric = "MCC",

NumOfParDepPlots = 3L,

# Grid tuning args

PassInGrid = NULL,

GridTune = FALSE,

BaselineComparison = "default",

MaxModelsInGrid = 10L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

Verbose = 1L,

# ML args

Trees = 500L,

eta = 0.30,

max_depth = 9L,

min_child_weight = 1.0,

subsample = 1,

colsample_bytree = 1,

DebugMode = FALSE)

LightGBM Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = TRUE,

MultiClass = FALSE)

# Run function

TestModel <- AutoQuant::AutoLightGBMClassifier(

# Metadata args

OutputSelection = c("Importances","EvalPlots","EvalMetrics","Score_TrainData"),

model_path = normalizePath("./"),

metadata_path = NULL,

ModelID = "Test_Model_1",

NumOfParDepPlots = 3L,

EncodingMethod = "credibility",

ReturnFactorLevels = TRUE,

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

DebugMode = FALSE,

# Data args

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

PrimaryDateColumn = NULL,

WeightsColumnName = NULL,

IDcols = c("IDcol_1","IDcol_2"),

# Grid parameters

GridTune = FALSE,

grid_eval_metric = 'Utility',

BaselineComparison = 'default',

MaxModelsInGrid = 10L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

PassInGrid = NULL,

# Core parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#core-parameters

input_model = NULL, # continue training a model that is stored to file

task = "train",

device_type = 'CPU',

NThreads = parallel::detectCores() / 2,

objective = 'binary',

metric = 'binary_logloss',

boosting = 'gbdt',

LinearTree = FALSE,

Trees = 50L,

eta = NULL,

num_leaves = 31,

deterministic = TRUE,

# Learning Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#learning-control-parameters

force_col_wise = FALSE,

force_row_wise = FALSE,

max_depth = NULL,

min_data_in_leaf = 20,

min_sum_hessian_in_leaf = 0.001,

bagging_freq = 0,

bagging_fraction = 1.0,

feature_fraction = 1.0,

feature_fraction_bynode = 1.0,

extra_trees = FALSE,

early_stopping_round = 10,

first_metric_only = TRUE,

max_delta_step = 0.0,

lambda_l1 = 0.0,

lambda_l2 = 0.0,

linear_lambda = 0.0,

min_gain_to_split = 0,

drop_rate_dart = 0.10,

max_drop_dart = 50,

skip_drop_dart = 0.50,

uniform_drop_dart = FALSE,

top_rate_goss = FALSE,

other_rate_goss = FALSE,

monotone_constraints = NULL,

monotone_constraints_method = "advanced",

monotone_penalty = 0.0,

forcedsplits_filename = NULL, # use for AutoStack option; .json file

refit_decay_rate = 0.90,

path_smooth = 0.0,

# IO Dataset Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#io-parameters

max_bin = 255,

min_data_in_bin = 3,

data_random_seed = 1,

is_enable_sparse = TRUE,

enable_bundle = TRUE,

use_missing = TRUE,

zero_as_missing = FALSE,

two_round = FALSE,

# Convert Parameters

convert_model = NULL,

convert_model_language = "cpp",

# Objective Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#objective-parameters

boost_from_average = TRUE,

is_unbalance = FALSE,

scale_pos_weight = 1.0,

# Metric Parameters (metric is in Core)

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#metric-parameters

is_provide_training_metric = TRUE,

eval_at = c(1,2,3,4,5),

# Network Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#network-parameters

num_machines = 1,

# GPU Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#gpu-parameters

gpu_platform_id = -1,

gpu_device_id = -1,

gpu_use_dp = TRUE,

num_gpu = 1)

H2O-GBM Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = TRUE,

MultiClass = FALSE)

TestModel <- AutoQuant::AutoH2oGBMClassifier(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

H2OShutdown = TRUE,

H2OStartUp = TRUE,

IfSaveModel = "mojo",

# Model evaluation

NumOfParDepPlots = 3,

# Metadata arguments:

model_path = normalizePath("./"),

metadata_path = file.path(normalizePath("./")),

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data arguments

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

WeightsColumn = NULL,

# ML grid tuning args

GridTune = FALSE,

GridStrategy = "Cartesian",

MaxRuntimeSecs = 60*60*24,

StoppingRounds = 10,

MaxModelsInGrid = 2,

# Model args

Trees = 50,

LearnRate = 0.10,

LearnRateAnnealing = 1,

eval_metric = "auc",

Distribution = "bernoulli",

MaxDepth = 20,

SampleRate = 0.632,

ColSampleRate = 1,

ColSampleRatePerTree = 1,

ColSampleRatePerTreeLevel = 1,

MinRows = 1,

NBins = 20,

NBinsCats = 1024,

NBinsTopLevel = 1024,

HistogramType = "AUTO",

CategoricalEncoding = "AUTO")
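The MaxMem expression used in the H2O examples shells out to awk and reads /proc/meminfo, so it only works on Linux. Below is a minimal base-R sketch of the same calculation with a fallback for other systems. The helper name free_mem_gb and the "4G" fallback are assumptions made for illustration; they are not part of AutoQuant or H2O.

```r
# Hypothetical helper (not part of AutoQuant): free memory as an H2O-style
# string such as "16G", with a fallback when /proc/meminfo is unavailable.
free_mem_gb <- function(meminfo_path = "/proc/meminfo", fallback = "4G") {
  if (!file.exists(meminfo_path)) return(fallback)
  lines <- readLines(meminfo_path, warn = FALSE)
  hit <- grep("^MemFree:", lines, value = TRUE)
  if (length(hit) == 0L) return(fallback)
  # The MemFree line reports kB; strip everything except the digits
  kb <- as.numeric(gsub("[^0-9]", "", hit[1L]))
  # Same scaling as the README expression: kB / 1,000,000, rounded down
  gb <- floor(kb / 1e6)
  if (is.na(gb) || gb < 1) return(fallback)
  paste0(as.integer(gb), "G")
}

MaxMem <- free_mem_gb()
print(MaxMem)
```

The result could then be passed as MaxMem = free_mem_gb() in place of the inline expression.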

H2O-DRF Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = TRUE,

MultiClass = FALSE)

TestModel <- AutoQuant::AutoH2oDRFClassifier(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1L, parallel::detectCores() - 2L),

IfSaveModel = "mojo",

H2OShutdown = FALSE,

H2OStartUp = TRUE,

# Model evaluation:

eval_metric = "auc",

NumOfParDepPlots = 3L,

# Metadata arguments:

model_path = normalizePath("./"),

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data arguments:

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2", "Adrian")],

WeightsColumn = NULL,

# Grid Tuning Args

GridStrategy = "Cartesian",

GridTune = FALSE,

MaxModelsInGrid = 10,

MaxRuntimeSecs = 60*60*24,

StoppingRounds = 10,

# Model args

Trees = 50L,

MaxDepth = 20,

SampleRate = 0.632,

MTries = -1,

ColSampleRatePerTree = 1,

ColSampleRatePerTreeLevel = 1,

MinRows = 1,

NBins = 20,

NBinsCats = 1024,

NBinsTopLevel = 1024,

HistogramType = "AUTO",

CategoricalEncoding = "AUTO")

H2O-GLM Example

# Create some dummy correlated data with numeric and categorical features

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = TRUE,

MultiClass = FALSE)

# Run function

TestModel <- AutoQuant::AutoH2oGLMClassifier(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

H2OShutdown = TRUE,

H2OStartUp = TRUE,

IfSaveModel = "mojo",

# Model evaluation args

eval_metric = "auc",

NumOfParDepPlots = 3,

# Metadata args

model_path = NULL,

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data args

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in%

c("IDcol_1", "IDcol_2","Adrian")],

RandomColNumbers = NULL,

InteractionColNumbers = NULL,

WeightsColumn = NULL,

# ML args

GridTune = FALSE,

GridStrategy = "Cartesian",

StoppingRounds = 10,

MaxRunTimeSecs = 3600 * 24 * 7,

MaxModelsInGrid = 10,

Distribution = "binomial",

Link = "logit",

RandomDistribution = NULL,

RandomLink = NULL,

Solver = "AUTO",

Alpha = NULL,

Lambda = NULL,

LambdaSearch = FALSE,

NLambdas = -1,

Standardize = TRUE,

RemoveCollinearColumns = FALSE,

InterceptInclude = TRUE,

NonNegativeCoefficients = FALSE)

H2O-AutoML Example

# Create some dummy correlated data with numeric and categorical features

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = TRUE,

MultiClass = FALSE)

TestModel <- AutoQuant::AutoH2oMLClassifier(

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

ExcludeAlgos = NULL,

eval_metric = "auc",

Trees = 50,

MaxMem = "32G",

NThreads = max(1, parallel::detectCores()-2),

MaxModelsInGrid = 10,

model_path = normalizePath("./"),

metadata_path = file.path(normalizePath("./"), "MetaData"),

ModelID = "FirstModel",

NumOfParDepPlots = 3,

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

IfSaveModel = "mojo",

H2OShutdown = FALSE,

HurdleModel = FALSE)

H2O-GAM Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = TRUE,

MultiClass = FALSE)

# Define GAM Columns to use - up to 9 are allowed

GamCols <- names(which(unlist(lapply(data, is.numeric))))

GamCols <- GamCols[!GamCols %in% c("Adrian","IDcol_1","IDcol_2")]

GamCols <- GamCols[seq_len(min(9L, length(GamCols)))]

# Run function

TestModel <- AutoQuant::AutoH2oGAMClassifier(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

H2OShutdown = TRUE,

H2OStartUp = TRUE,

IfSaveModel = "mojo",

# Model evaluation:

eval_metric = "auc",

NumOfParDepPlots = 3,

# Metadata arguments:

model_path = NULL,

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data arguments:

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

WeightsColumn = NULL,

GamColNames = GamCols,

# ML args

num_knots = NULL,

keep_gam_cols = TRUE,

GridTune = FALSE,

GridStrategy = "Cartesian",

StoppingRounds = 10,

MaxRunTimeSecs = 3600 * 24 * 7,

MaxModelsInGrid = 10,

Distribution = "binomial",

Link = "logit",

Solver = "AUTO",

Alpha = NULL,

Lambda = NULL,

LambdaSearch = FALSE,

NLambdas = -1,

Standardize = TRUE,

RemoveCollinearColumns = FALSE,

InterceptInclude = TRUE,

NonNegativeCoefficients = FALSE)
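The GAM column selection in the example above keeps at most nine numeric columns after dropping the target and ID columns. The sketch below reproduces that logic on a toy data frame so it can be run without AutoQuant or H2O. The helper name pick_gam_cols and the toy column names are assumptions for illustration only.

```r
# Toy data standing in for FakeDataGenerator() output (column names are illustrative)
toy <- data.frame(
  Adrian = factor(c("a", "b", "a")),
  IDcol_1 = 1:3,
  IDcol_2 = 4:6,
  Independent_Variable1 = c(0.1, 0.2, 0.3),
  Independent_Variable2 = c(1.5, 2.5, 3.5),
  Factor_1 = c("x", "y", "z"),
  stringsAsFactors = FALSE
)

# Hypothetical helper: numeric columns, minus excluded names, capped at max_cols
pick_gam_cols <- function(df, exclude, max_cols = 9L) {
  numeric_cols <- names(df)[vapply(df, is.numeric, logical(1L))]
  candidates <- setdiff(numeric_cols, exclude)
  # seq_len() returns an empty index when there are no candidates
  candidates[seq_len(min(max_cols, length(candidates)))]
}

GamCols <- pick_gam_cols(toy, exclude = c("Adrian", "IDcol_1", "IDcol_2"))
print(GamCols)
```

Using seq_len() means an empty candidate set yields an empty selection rather than an index error.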

MultiClass Classification

MultiClass Description

The Auto_MultiClass() models handle a multitude of tasks in sequence.

CatBoost Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 10000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = FALSE,

MultiClass = TRUE)

# Run function

TestModel <- AutoQuant::AutoCatBoostMultiClass(

# GPU or CPU and the number of available GPUs

task_type = 'GPU',

NumGPUs = 1,

TrainOnFull = FALSE,

DebugMode = FALSE,

# Metadata args

OutputSelection = c('Importances', 'EvalPlots', 'EvalMetrics', 'Score_TrainData'),

ModelID = 'Test_Model_1',

model_path = normalizePath('./'),

metadata_path = normalizePath('./'),

SaveModelObjects = FALSE,

ReturnModelObjects = TRUE,

# Data args

data = data,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = 'Adrian',

FeatureColNames = names(data)[!names(data) %in%

c('IDcol_1', 'IDcol_2','Adrian')],

PrimaryDateColumn = NULL,

WeightsColumnName = NULL,

ClassWeights = c(1L,1L,1L,1L,1L),

IDcols = c('IDcol_1','IDcol_2'),

# Model evaluation

eval_metric = 'MCC',

loss_function = 'MultiClassOneVsAll',

grid_eval_metric = 'Accuracy',

MetricPeriods = 10L,

NumOfParDepPlots = 3,

# Grid tuning args

PassInGrid = NULL,

GridTune = TRUE,

MaxModelsInGrid = 30L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

BaselineComparison = 'default',

# ML args

langevin = FALSE,

diffusion_temperature = 10000,

Trees = seq(100L, 500L, 50L),

Depth = seq(4L, 8L, 1L),

LearningRate = seq(0.01,0.10,0.01),

L2_Leaf_Reg = seq(1.0, 10.0, 1.0),

RandomStrength = 1,

BorderCount = 254,

RSM = c(0.80, 0.85, 0.90, 0.95, 1.0),

BootStrapType = c('Bayesian', 'Bernoulli', 'Poisson', 'MVS', 'No'),

GrowPolicy = c('SymmetricTree', 'Depthwise', 'Lossguide'),

model_size_reg = 0.5,

feature_border_type = 'GreedyLogSum',

sampling_unit = 'Object',

subsample = NULL,

score_function = 'Cosine',

min_data_in_leaf = 1)

XGBoost Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = FALSE,

MultiClass = TRUE)

# Run function

TestModel <- AutoQuant::AutoXGBoostMultiClass(

# GPU or CPU

TreeMethod = "hist",

NThreads = parallel::detectCores(),

# Metadata args

OutputSelection = c("Importances", "EvalPlots", "EvalMetrics", "PDFs", "Score_TrainData"),

model_path = normalizePath("./"),

metadata_path = normalizePath("./"),

ModelID = "Test_Model_1",

EncodingMethod = "binary",

ReturnFactorLevels = TRUE,

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

# Data args

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in%

c("IDcol_1", "IDcol_2","Adrian")],

WeightsColumnName = NULL,

IDcols = c("IDcol_1","IDcol_2"),

# Model evaluation args

eval_metric = "merror",

LossFunction = 'multi:softprob',

grid_eval_metric = "accuracy",

NumOfParDepPlots = 3L,

# Grid tuning args

PassInGrid = NULL,

GridTune = FALSE,

BaselineComparison = "default",

MaxModelsInGrid = 10L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

Verbose = 1L,

DebugMode = FALSE,

# ML args

Trees = 50L,

eta = 0.05,

max_depth = 4L,

min_child_weight = 1.0,

subsample = 0.55,

colsample_bytree = 0.55)

LightGBM Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = TRUE)

# Run function

TestModel <- AutoQuant::AutoLightGBMMultiClass(

# Metadata args

OutputSelection = c("Importances","EvalPlots","EvalMetrics","Score_TrainData"),

model_path = normalizePath("./"),

metadata_path = NULL,

ModelID = "Test_Model_1",

NumOfParDepPlots = 3L,

EncodingMethod = "credibility",

ReturnFactorLevels = TRUE,

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

DebugMode = FALSE,

# Data args

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

PrimaryDateColumn = NULL,

WeightsColumnName = NULL,

IDcols = c("IDcol_1","IDcol_2"),

# Grid parameters

GridTune = FALSE,

grid_eval_metric = 'microauc',

BaselineComparison = 'default',

MaxModelsInGrid = 10L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

PassInGrid = NULL,

# Core parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#core-parameters

input_model = NULL, # continue training a model that is stored to file

task = "train",

device_type = 'CPU',

NThreads = parallel::detectCores() / 2,

objective = 'multiclass',

multi_error_top_k = 1,

metric = 'multi_logloss',

boosting = 'gbdt',

LinearTree = FALSE,

Trees = 50L,

eta = NULL,

num_leaves = 31,

deterministic = TRUE,

# Learning Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#learning-control-parameters

force_col_wise = FALSE,

force_row_wise = FALSE,

max_depth = NULL,

min_data_in_leaf = 20,

min_sum_hessian_in_leaf = 0.001,

bagging_freq = 0,

bagging_fraction = 1.0,

feature_fraction = 1.0,

feature_fraction_bynode = 1.0,

extra_trees = FALSE,

early_stopping_round = 10,

first_metric_only = TRUE,

max_delta_step = 0.0,

lambda_l1 = 0.0,

lambda_l2 = 0.0,

linear_lambda = 0.0,

min_gain_to_split = 0,

drop_rate_dart = 0.10,

max_drop_dart = 50,

skip_drop_dart = 0.50,

uniform_drop_dart = FALSE,

top_rate_goss = FALSE,

other_rate_goss = FALSE,

monotone_constraints = NULL,

monotone_constraints_method = "advanced",

monotone_penalty = 0.0,

forcedsplits_filename = NULL, # use for AutoStack option; .json file

refit_decay_rate = 0.90,

path_smooth = 0.0,

# IO Dataset Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#io-parameters

max_bin = 255,

min_data_in_bin = 3,

data_random_seed = 1,

is_enable_sparse = TRUE,

enable_bundle = TRUE,

use_missing = TRUE,

zero_as_missing = FALSE,

two_round = FALSE,

# Convert Parameters

convert_model = NULL,

convert_model_language = "cpp",

# Objective Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#objective-parameters

boost_from_average = TRUE,

is_unbalance = FALSE,

scale_pos_weight = 1.0,

# Metric Parameters (metric is in Core)

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#metric-parameters

is_provide_training_metric = TRUE,

eval_at = c(1,2,3,4,5),

# Network Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#network-parameters

num_machines = 1,

# GPU Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#gpu-parameters

gpu_platform_id = -1,

gpu_device_id = -1,

gpu_use_dp = TRUE,

num_gpu = 1)

H2O-GBM Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = TRUE)

# Run function

TestModel <- AutoQuant::AutoH2oGBMMultiClass(

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

WeightsColumn = NULL,

eval_metric = "logloss",

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

model_path = normalizePath("./"),

metadata_path = file.path(normalizePath("./")),

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

IfSaveModel = "mojo",

H2OShutdown = TRUE,

H2OStartUp = TRUE,

# Model args

GridTune = FALSE,

GridStrategy = "Cartesian",

MaxRuntimeSecs = 60*60*24,

StoppingRounds = 10,

MaxModelsInGrid = 2,

Trees = 50,

LearnRate = 0.10,

LearnRateAnnealing = 1,

Distribution = "multinomial",

MaxDepth = 20,

SampleRate = 0.632,

ColSampleRate = 1,

ColSampleRatePerTree = 1,

ColSampleRatePerTreeLevel = 1,

MinRows = 1,

NBins = 20,

NBinsCats = 1024,

NBinsTopLevel = 1024,

HistogramType = "AUTO",

CategoricalEncoding = "AUTO")

H2O-DRF Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = FALSE,

MultiClass = TRUE)

# Run function

TestModel <- AutoQuant::AutoH2oDRFMultiClass(

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

WeightsColumn = NULL,

eval_metric = "logloss",

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

model_path = normalizePath("./"),

metadata_path = file.path(normalizePath("./")),

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

IfSaveModel = "mojo",

H2OShutdown = FALSE,

H2OStartUp = TRUE,

# Grid Tuning Args

GridStrategy = "Cartesian",

GridTune = FALSE,

MaxModelsInGrid = 10,

MaxRuntimeSecs = 60*60*24,

StoppingRounds = 10,

# ML args

Trees = 50,

MaxDepth = 20,

SampleRate = 0.632,

MTries = -1,

ColSampleRatePerTree = 1,

ColSampleRatePerTreeLevel = 1,

MinRows = 1,

NBins = 20,

NBinsCats = 1024,

NBinsTopLevel = 1024,

HistogramType = "AUTO",

CategoricalEncoding = "AUTO")

H2O-GLM Example

# Create some dummy correlated data with numeric and categorical features

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = FALSE,

MultiClass = TRUE)

# Run function

TestModel <- AutoQuant::AutoH2oGLMMultiClass(

# Compute management

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

H2OShutdown = TRUE,

H2OStartUp = TRUE,

IfSaveModel = "mojo",

# Model evaluation:

eval_metric = "logloss",

NumOfParDepPlots = 3,

# Metadata arguments:

model_path = NULL,

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

# Data arguments:

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

RandomColNumbers = NULL,

InteractionColNumbers = NULL,

WeightsColumn = NULL,

# Model args

GridTune = FALSE,

GridStrategy = "Cartesian",

StoppingRounds = 10,

MaxRunTimeSecs = 3600 * 24 * 7,

MaxModelsInGrid = 10,

Distribution = "multinomial",

Link = "family_default",

RandomDistribution = NULL,

RandomLink = NULL,

Solver = "AUTO",

Alpha = NULL,

Lambda = NULL,

LambdaSearch = FALSE,

NLambdas = -1,

Standardize = TRUE,

RemoveCollinearColumns = FALSE,

InterceptInclude = TRUE,

NonNegativeCoefficients = FALSE)

H2O-AutoML Example

# Create some dummy correlated data with numeric and categorical features

data <- AutoQuant::FakeDataGenerator(Correlation = 0.85, N = 1000, ID = 2, ZIP = 0, AddDate = FALSE, Classification = FALSE, MultiClass = TRUE)

# Run function

TestModel <- AutoQuant::AutoH2oMLMultiClass(

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

ExcludeAlgos = NULL,

eval_metric = "logloss",

Trees = 50,

MaxMem = "32G",

NThreads = max(1, parallel::detectCores()-2),

MaxModelsInGrid = 10,

model_path = normalizePath("./"),

metadata_path = file.path(normalizePath("./"), "MetaData"),

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

IfSaveModel = "mojo",

H2OShutdown = FALSE,

HurdleModel = FALSE)

H2O-GAM Example

# Create some dummy correlated data with numeric and categorical features

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 1000L,

ID = 2L,

ZIP = 0L,

AddDate = FALSE,

Classification = FALSE,

MultiClass = TRUE)

# Define GAM Columns to use - up to 9 are allowed

GamCols <- names(which(unlist(lapply(data, is.numeric))))

GamCols <- GamCols[!GamCols %in% c("Adrian","IDcol_1","IDcol_2")]

GamCols <- GamCols[seq_len(min(9L, length(GamCols)))]

# Run function

TestModel <- AutoQuant::AutoH2oGAMMultiClass(

data = data,

TrainOnFull = FALSE,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = "Adrian",

FeatureColNames = names(data)[!names(data) %in% c("IDcol_1", "IDcol_2","Adrian")],

WeightsColumn = NULL,

GamColNames = GamCols,

eval_metric = "logloss",

MaxMem = {gc();paste0(as.character(floor(as.numeric(system("awk '/MemFree/ {print $2}' /proc/meminfo", intern=TRUE)) / 1000000)),"G")},

NThreads = max(1, parallel::detectCores()-2),

model_path = normalizePath("./"),

metadata_path = NULL,

ModelID = "FirstModel",

ReturnModelObjects = TRUE,

SaveModelObjects = FALSE,

IfSaveModel = "mojo",

H2OShutdown = FALSE,

H2OStartUp = TRUE,

# ML args

num_knots = NULL,

keep_gam_cols = TRUE,

GridTune = FALSE,

GridStrategy = "Cartesian",

StoppingRounds = 10,

MaxRunTimeSecs = 3600 * 24 * 7,

MaxModelsInGrid = 10,

Distribution = "multinomial",

Link = "Family_Default",

Solver = "AUTO",

Alpha = NULL,

Lambda = NULL,

LambdaSearch = FALSE,

NLambdas = -1,

Standardize = TRUE,

RemoveCollinearColumns = FALSE,

InterceptInclude = TRUE,

NonNegativeCoefficients = FALSE)

Model Scoring

Scoring Description

AutoCatBoostScoring() is an automated scoring function that complements the AutoCatBoost__() model training functions. This function requires you to supply features for scoring. It will run ModelDataPrep() to prepare your features for catboost data conversion and scoring. It will also handle any transformations and back-transformations if you utilized that feature in the regression training case.

AutoXGBoostScoring() is an automated scoring function that complements the AutoXGBoost__() model training functions. This function requires you to supply features for scoring. It will run ModelDataPrep() and the CategoricalEncoding() functions to prepare your features for xgboost data conversion and scoring. It will also handle any transformations and back-transformations if you utilized that feature in the regression training case.

AutoLightGBMScoring() is an automated scoring function that complements the AutoLightGBM__() model training functions. This function requires you to supply features for scoring. It will run ModelDataPrep() and the CategoricalEncoding() functions to prepare your features for lightgbm data conversion and scoring. It will also handle any transformations and back-transformations if you utilized that feature in the regression training case.

AutoH2OMLScoring() is an automated scoring function that complements the AutoH2oGBM__() and AutoH2oDRF__() model training functions. This function requires you to supply features for scoring. It will run ModelDataPrep() to prepare your features for H2O data conversion and scoring. It will also handle transformations and back-transformations if you utilized that feature in the regression training case and didn't do it yourself beforehand.

AutoCatBoostHurdleModelScoring() is for scoring models developed with AutoCatBoostHurdleModel().

AutoLightGBMHurdleModelScoring() is for scoring models developed with AutoLightGBMHurdleModel().

AutoXGBoostHurdleModelScoring() is for scoring models developed with AutoXGBoostHurdleModel().
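To make the transformation and back-transformation step concrete, here is a minimal base-R sketch of the general idea: fit on a transformed target, predict on the transformed scale, then invert the transform. It uses lm() with a log1p/expm1 pair purely for illustration. It is an assumption-laden stand-in and not how the AutoQuant scoring functions are implemented.

```r
set.seed(42)

# Simulated skewed regression target (illustrative data only)
n <- 200
x <- runif(n, 0, 5)
y <- exp(0.5 * x + rnorm(n, sd = 0.1)) - 1

# Train on the transformed target (a LogPlus1-style transform)
train <- data.frame(x = x, y_t = log1p(y))
fit <- lm(y_t ~ x, data = train)

# Score new rows: predictions come back on the transformed scale
new_data <- data.frame(x = c(1, 2, 3))
pred_t <- predict(fit, newdata = new_data)

# Back-transform to the original target scale
pred <- expm1(pred_t)
print(pred)
```

Without the final expm1() call the predictions would remain on the log scale, which is the situation the back-transformation options are meant to prevent.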

AutoCatBoost__() Examples

AutoCatBoostRegression() Scoring Example

# Create some dummy correlated data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 10000,

ID = 2,

ZIP = 0,

AddDate = FALSE,

Classification = FALSE,

MultiClass = FALSE)

# Copy data

data1 <- data.table::copy(data)

# Feature Colnames

Features <- names(data1)[!names(data1) %in% c("IDcol_1", "IDcol_2","DateTime","Adrian")]

# Run function

TestModel <- AutoQuant::AutoCatBoostRegression(

# GPU or CPU and the number of available GPUs

TrainOnFull = FALSE,

task_type = 'CPU',

NumGPUs = 1,

DebugMode = FALSE,

# Metadata args

OutputSelection = c('Importances','EvalPlots','EvalMetrics','Score_TrainData'),

ModelID = 'Test_Model_1',

model_path = getwd(),

metadata_path = getwd(),

SaveModelObjects = FALSE,

SaveInfoToPDF = FALSE,

ReturnModelObjects = TRUE,

# Data args

data = data1,

ValidationData = NULL,

TestData = NULL,

TargetColumnName = 'Adrian',

FeatureColNames = Features,

PrimaryDateColumn = NULL,

WeightsColumnName = NULL,

IDcols = c('IDcol_1','IDcol_2'),

TransformNumericColumns = 'Adrian',

Methods = c('Asinh','Asin','Log','LogPlus1','Sqrt','Logit'),

# Model evaluation

eval_metric = 'RMSE',

eval_metric_value = 1.5,

loss_function = 'RMSE',

loss_function_value = 1.5,

MetricPeriods = 10L,

NumOfParDepPlots = ncol(data1)-1L-2L,

# Grid tuning args

PassInGrid = NULL,

GridTune = FALSE,

MaxModelsInGrid = 30L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 60*60,

BaselineComparison = 'default',

# ML args

langevin = FALSE,

diffusion_temperature = 10000,

Trees = 1000,

Depth = 9,

L2_Leaf_Reg = NULL,

RandomStrength = 1,

BorderCount = 128,

LearningRate = NULL,

RSM = 1,

BootStrapType = NULL,

GrowPolicy = 'SymmetricTree',

model_size_reg = 0.5,

feature_border_type = 'GreedyLogSum',

sampling_unit = 'Object',

subsample = NULL,

score_function = 'Cosine',

min_data_in_leaf = 1)

# Insights Report

AutoQuant::ModelInsightsReport(

TrainDataInclude = TRUE,

FeatureColumnNames = Features,

SampleSize = 100000,

ModelObject = TestModel,

ModelID = 'Test_Model_1',

OutputPath = getwd())

# Score data

Preds <- AutoQuant::AutoCatBoostScoring(

TargetType = 'regression',

ScoringData = data,

FeatureColumnNames = Features,

FactorLevelsList = TestModel$FactorLevelsList,

IDcols = c('IDcol_1','IDcol_2'),

OneHot = FALSE,

ReturnShapValues = TRUE,

ModelObject = TestModel$Model,

ModelPath = NULL,

ModelID = 'Test_Model_1',

ReturnFeatures = TRUE,

MultiClassTargetLevels = NULL,

TransformNumeric = FALSE,

BackTransNumeric = FALSE,

TargetColumnName = NULL,

TransformationObject = NULL,

TransID = NULL,

TransPath = NULL,

MDP_Impute = TRUE,

MDP_CharToFactor = TRUE,

MDP_RemoveDates = TRUE,

MDP_MissFactor = '0',

MDP_MissNum = -1,

RemoveModel = FALSE)

AutoCatBoostClassifier() Scoring Example

# Refresh data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 25000L,

ID = 2L,

AddWeightsColumn = TRUE,

ZIP = 0L,

AddDate = TRUE,

Classification = TRUE,

MultiClass = FALSE)

# Copy data (used for scoring below)

data1 <- data.table::copy(data)

# Partition Data

Sets <- Rodeo::AutoDataPartition(

data = data,

NumDataSets = 3,

Ratios = c(0.7,0.2,0.1),

PartitionType = "random",

StratifyColumnNames = "Adrian",

TimeColumnName = NULL)

TTrainData <- Sets$TrainData

VValidationData <- Sets$ValidationData

TTestData <- Sets$TestData

rm(Sets)

# Feature Colnames

Features <- names(TTrainData)[!names(TTrainData) %in% c("IDcol_1", "IDcol_2","DateTime","Adrian")]

# AutoCatBoostClassifier

TestModel <- AutoQuant::AutoCatBoostClassifier(

# GPU or CPU and the number of available GPUs

task_type = "CPU",

NumGPUs = 1,

# Metadata arguments

OutputSelection = c("Importances", "EvalPlots", "EvalMetrics", "Score_TrainData"),

ModelID = "Test_Model_1",

model_path = normalizePath("./"),

metadata_path = normalizePath("./"),

SaveModelObjects = FALSE,

ReturnModelObjects = TRUE,

SaveInfoToPDF = FALSE,

# Data arguments

data = TTrainData,

TrainOnFull = FALSE,

ValidationData = VValidationData,

TestData = TTestData,

TargetColumnName = "Adrian",

FeatureColNames = Features,

PrimaryDateColumn = "DateTime",

WeightsColumnName = "Weights",

ClassWeights = c(1L,1L),

IDcols = c("IDcol_1","IDcol_2","DateTime"),

# Model evaluation

CostMatrixWeights = c(2,0,0,1),

EvalMetric = "MCC",

LossFunction = "Logloss",

grid_eval_metric = "Utility",

MetricPeriods = 10L,

NumOfParDepPlots = 3,

# Grid tuning arguments

PassInGrid = NULL,

GridTune = FALSE,

MaxModelsInGrid = 30L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

BaselineComparison = "default",

# ML args

Trees = 100L,

Depth = 4L,

LearningRate = NULL,

L2_Leaf_Reg = NULL,

RandomStrength = 1,

BorderCount = 128,

RSM = 0.80,

BootStrapType = "Bayesian",

GrowPolicy = "SymmetricTree",

langevin = FALSE,

diffusion_temperature = 10000,

model_size_reg = 0.5,

feature_border_type = "GreedyLogSum",

sampling_unit = "Object",

subsample = NULL,

score_function = "Cosine",

min_data_in_leaf = 1,

DebugMode = TRUE)

# Insights Report

AutoQuant::ModelInsightsReport(

TrainDataInclude = TRUE,

FeatureColumnNames = Features,

SampleSize = 100000,

ModelObject = TestModel,

ModelID = 'Test_Model_1',

OutputPath = getwd())

# Score data

Preds <- AutoQuant::AutoCatBoostScoring(

TargetType = 'classifier',

ScoringData = data,

FeatureColumnNames = Features,

FactorLevelsList = TestModel$FactorLevelsList,

IDcols = c("IDcol_1","IDcol_2","DateTime"),

OneHot = FALSE,

ReturnShapValues = TRUE,

ModelObject = TestModel$Model,

ModelPath = NULL,

ModelID = 'Test_Model_1',

ReturnFeatures = TRUE,

MultiClassTargetLevels = NULL,

TransformNumeric = FALSE,

BackTransNumeric = FALSE,

TargetColumnName = NULL,

TransformationObject = NULL,

TransID = NULL,

TransPath = NULL,

MDP_Impute = TRUE,

MDP_CharToFactor = TRUE,

MDP_RemoveDates = TRUE,

MDP_MissFactor = '0',

MDP_MissNum = -1,

RemoveModel = FALSE)
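The partition step in the example above splits the data 70/20/10 while stratifying on the target. The following base-R sketch shows one way such a stratified random split can work. The function stratified_partition is hypothetical and written for illustration; it is not the implementation behind Rodeo::AutoDataPartition().

```r
# Hypothetical sketch of a stratified random split (not Rodeo's implementation)
stratified_partition <- function(df, strata_col, ratios = c(0.7, 0.2, 0.1), seed = 1L) {
  stopifnot(abs(sum(ratios) - 1) < 1e-8)
  set.seed(seed)
  assignment <- integer(nrow(df))
  # Shuffle row indices within each stratum, then cut them by the ratios
  for (rows in split(seq_len(nrow(df)), df[[strata_col]])) {
    shuffled <- rows[sample.int(length(rows))]
    cuts <- round(cumsum(ratios) * length(shuffled))
    cuts[length(cuts)] <- length(shuffled)
    sizes <- diff(c(0, cuts))
    assignment[shuffled] <- rep(seq_along(ratios), times = sizes)
  }
  lapply(seq_along(ratios), function(i) df[assignment == i, , drop = FALSE])
}

# Toy binary target with a 20% positive rate
toy <- data.frame(id = 1:1000, Adrian = rep(c(0L, 1L), times = c(800, 200)))
parts <- stratified_partition(toy, "Adrian")
names(parts) <- c("TrainData", "ValidationData", "TestData")
print(sapply(parts, nrow))
```

Because the shuffle happens inside each stratum, every partition keeps roughly the same class balance as the full data set.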

AutoCatBoostMultiClass() Scoring Example

# Refresh data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 25000L,

ID = 2L,

AddWeightsColumn = TRUE,

ZIP = 0L,

AddDate = TRUE,

Classification = FALSE,

MultiClass = TRUE)

# Copy data (used for scoring below)

data1 <- data.table::copy(data)

# Partition Data

Sets <- Rodeo::AutoDataPartition(

data = data,

NumDataSets = 3,

Ratios = c(0.7,0.2,0.1),

PartitionType = "random",

StratifyColumnNames = "Adrian",

TimeColumnName = NULL)

TTrainData <- Sets$TrainData

VValidationData <- Sets$ValidationData

TTestData <- Sets$TestData

rm(Sets)

# Feature Colnames

Features <- names(TTrainData)[!names(TTrainData) %in% c("IDcol_1", "IDcol_2","Adrian","DateTime")]

# Run function

TestModel <- AutoQuant::AutoCatBoostMultiClass(

# GPU or CPU and the number of available GPUs

task_type = "GPU",

NumGPUs = 1,

# Metadata arguments

OutputSelection = c("Importances", "EvalPlots", "EvalMetrics", "Score_TrainData"),

ModelID = "Test_Model_1",

model_path = normalizePath("./"),

metadata_path = normalizePath("./"),

SaveModelObjects = FALSE,

ReturnModelObjects = TRUE,

# Data arguments

data = TTrainData,

TrainOnFull = FALSE,

ValidationData = VValidationData,

TestData = TTestData,

TargetColumnName = "Adrian",

FeatureColNames = Features,

PrimaryDateColumn = "DateTime",

WeightsColumnName = "Weights",

ClassWeights = c(1L,1L,1L,1L,1L),

IDcols = c("IDcol_1","IDcol_2","DateTime"),

# Model evaluation

eval_metric = "MCC",

loss_function = "MultiClassOneVsAll",

grid_eval_metric = "Accuracy",

MetricPeriods = 10L,

# Grid tuning arguments

PassInGrid = NULL,

GridTune = FALSE,

MaxModelsInGrid = 30L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

BaselineComparison = "default",

# ML args

Trees = 100L,

Depth = 4L,

LearningRate = 0.01,

L2_Leaf_Reg = 1.0,

RandomStrength = 1,

BorderCount = 128,

langevin = FALSE,

diffusion_temperature = 10000,

RSM = 0.80,

BootStrapType = "Bayesian",

GrowPolicy = "SymmetricTree",

model_size_reg = 0.5,

feature_border_type = "GreedyLogSum",

sampling_unit = "Group",

subsample = NULL,

score_function = "Cosine",

min_data_in_leaf = 1,

DebugMode = TRUE)

# Insights Report

AutoQuant::ModelInsightsReport(

TrainDataInclude = TRUE,

FeatureColumnNames = Features,

SampleSize = 100000,

ModelObject = TestModel,

ModelID = 'Test_Model_1',

OutputPath = getwd())

# Score data

Preds <- AutoQuant::AutoCatBoostScoring(

TargetType = 'multiclass',

ScoringData = data,

FeatureColumnNames = Features,

FactorLevelsList = TestModel$FactorLevelsList,

IDcols = c("IDcol_1","IDcol_2","DateTime"),

OneHot = FALSE,

ReturnShapValues = FALSE,

ModelObject = TestModel$Model,

ModelPath = NULL,

ModelID = 'Test_Model_1',

ReturnFeatures = TRUE,

MultiClassTargetLevels = TestModel$TargetLevels,

TransformNumeric = FALSE,

BackTransNumeric = FALSE,

TargetColumnName = NULL,

TransformationObject = NULL,

TransID = NULL,

TransPath = NULL,

MDP_Impute = TRUE,

MDP_CharToFactor = TRUE,

MDP_RemoveDates = TRUE,

MDP_MissFactor = '0',

MDP_MissNum = -1,

RemoveModel = FALSE)
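The `MDP_` arguments in the scoring call control how the scoring data is prepared before prediction. Going by their names, `MDP_MissFactor = '0'` and `MDP_MissNum = -1` are the fill values for missing categorical and numeric entries. The base R sketch below shows that idea on a toy data.frame; `impute_sketch` is a hypothetical helper for illustration and is not the package's implementation.

```r
# Hypothetical helper: fill missing values the way the MDP_ argument names suggest
impute_sketch <- function(df, miss_factor = "0", miss_num = -1) {
  for (col in names(df)) {
    x <- df[[col]]
    # Convert character columns to factor (the idea behind MDP_CharToFactor)
    if (is.character(x)) x <- factor(x)
    if (is.factor(x)) {
      if (anyNA(x)) {
        # Add the fill value as a level before assigning it
        levels(x) <- union(levels(x), miss_factor)
        x[is.na(x)] <- miss_factor
      }
    } else if (is.numeric(x)) {
      x[is.na(x)] <- miss_num
    }
    df[[col]] <- x
  }
  df
}

toy <- data.frame(
  num = c(1.5, NA, 3),
  chr = c("a", NA, "b"),
  stringsAsFactors = FALSE)

clean <- impute_sketch(toy)
print(clean)
```

Whatever fill values you choose, they should match the ones used when the training data was prepared, otherwise the model sees values at scoring time that it never saw during training.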

AutoLightGBM__() Examples

AutoLightGBMRegression() Scoring Example

# Refresh data

data <- AutoQuant::FakeDataGenerator(

Correlation = 0.85,

N = 25000L,

ID = 2L,

AddWeightsColumn = TRUE,

ZIP = 0L,

AddDate = TRUE,

Classification = FALSE,

MultiClass = FALSE)
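The `Correlation = 0.85` argument asks for features that are correlated with the target. To get a feel for what that means, here is a minimal base R sketch that draws two columns with a chosen correlation. `make_correlated` and the column name `Independent_Variable1` are hypothetical, and this is not how `FakeDataGenerator` builds its data.

```r
# Hypothetical helper: two standard-normal columns with a target correlation
make_correlated <- function(n, rho, seed = 123L) {
  stopifnot(abs(rho) <= 1)
  set.seed(seed)
  x <- rnorm(n)
  noise <- rnorm(n)
  # y = rho * x + sqrt(1 - rho^2) * noise has unit variance and cor(x, y) = rho
  y <- rho * x + sqrt(1 - rho^2) * noise
  data.frame(Adrian = y, Independent_Variable1 = x)
}

sim <- make_correlated(n = 25000L, rho = 0.85)

# Sample estimate, which should land close to 0.85 at this sample size
print(cor(sim$Adrian, sim$Independent_Variable1))
```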

# Partition Data

Sets <- Rodeo::AutoDataPartition(

data = data,

NumDataSets = 3,

Ratios = c(0.7,0.2,0.1),

PartitionType = "random",

StratifyColumnNames = "Adrian",

TimeColumnName = NULL)

TTrainData <- Sets$TrainData

VValidationData <- Sets$ValidationData

TTestData <- Sets$TestData

rm(Sets)
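The partition step above splits the data 70/20/10 into train, validation, and test sets. As a rough illustration of what a random partition does, here is a base R sketch. `random_partition` is a hypothetical helper, it ignores stratification, and it is not the `AutoDataPartition` implementation.

```r
# Hypothetical helper: shuffle rows, then cut them into blocks sized by ratio
random_partition <- function(df, ratios = c(0.7, 0.2, 0.1), seed = 42L) {
  stopifnot(abs(sum(ratios) - 1) < 1e-8)
  set.seed(seed)
  n <- nrow(df)
  idx <- sample.int(n)
  # Cumulative cut points; round() avoids floating point surprises such as 0.7 + 0.2
  bounds <- c(0, round(cumsum(ratios) * n))
  bounds[length(bounds)] <- n  # make sure no row is left out
  lapply(seq_along(ratios), function(i) {
    rows <- idx[seq.int(bounds[i] + 1, length.out = bounds[i + 1] - bounds[i])]
    df[rows, , drop = FALSE]
  })
}

demo <- data.frame(id = seq_len(1000L), y = rnorm(1000L))
parts <- random_partition(demo)

# Row counts per partition
print(sapply(parts, nrow))
```

Every row ends up in exactly one partition, which is the property to check first if you ever write your own split logic.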

# Run function

TestModel <- AutoQuant::AutoLightGBMRegression(

# Compute resources (device_type below selects CPU or GPU)

NThreads = parallel::detectCores(),

# Metadata args

OutputSelection = c("Importances","EvalPlots","EvalMetrics","Score_TrainData"),

model_path = getwd(),

metadata_path = getwd(),

ModelID = "Test_Model_1",

NumOfParDepPlots = 3L,

EncodingMethod = "credibility",

ReturnFactorLevels = TRUE,

ReturnModelObjects = TRUE,

SaveModelObjects = TRUE,

SaveInfoToPDF = FALSE,

DebugMode = TRUE,

# Data args

data = TTrainData,

TrainOnFull = FALSE,

ValidationData = VValidationData,

TestData = TTestData,

TargetColumnName = "Adrian",

FeatureColNames = names(TTrainData)[!names(TTrainData) %in% c("IDcol_1", "IDcol_2","DateTime","Adrian")],

PrimaryDateColumn = "DateTime",

WeightsColumnName = "Weights",

IDcols = c("IDcol_1","IDcol_2","DateTime"),

TransformNumericColumns = NULL,

Methods = c("Asinh","Asin","Log","LogPlus1","Sqrt","Logit"),

# Grid parameters

GridTune = FALSE,

grid_eval_metric = "r2",

BaselineComparison = "default",

MaxModelsInGrid = 10L,

MaxRunsWithoutNewWinner = 20L,

MaxRunMinutes = 24L*60L,

PassInGrid = NULL,

# Core parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#core-parameters

input_model = NULL, # continue training a model that is stored to file

task = "train",

device_type = "CPU",

objective = 'regression',

metric = "rmse",

boosting = "gbdt",

LinearTree = FALSE,

Trees = 50L,

eta = NULL,

num_leaves = 31,

deterministic = TRUE,

# Learning Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#learning-control-parameters

force_col_wise = FALSE,

force_row_wise = FALSE,

max_depth = 6,

min_data_in_leaf = 20,

min_sum_hessian_in_leaf = 0.001,

bagging_freq = 1.0,

bagging_fraction = 1.0,

feature_fraction = 1.0,

feature_fraction_bynode = 1.0,

lambda_l1 = 0.0,

lambda_l2 = 0.0,

extra_trees = FALSE,

early_stopping_round = 10,

first_metric_only = TRUE,

max_delta_step = 0.0,

linear_lambda = 0.0,

min_gain_to_split = 0,

drop_rate_dart = 0.10,

max_drop_dart = 50,

skip_drop_dart = 0.50,

uniform_drop_dart = FALSE,

top_rate_goss = FALSE,

other_rate_goss = FALSE,

monotone_constraints = NULL,

monotone_constraints_method = "advanced",

monotone_penalty = 0.0,

forcedsplits_filename = NULL, # use for AutoStack option; .json file

refit_decay_rate = 0.90,

path_smooth = 0.0,

# IO Dataset Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#io-parameters

max_bin = 255,

min_data_in_bin = 3,

data_random_seed = 1,

is_enable_sparse = TRUE,

enable_bundle = TRUE,

use_missing = TRUE,

zero_as_missing = FALSE,

two_round = FALSE,

# Convert Parameters

convert_model = NULL,

convert_model_language = "cpp",

# Objective Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#objective-parameters

boost_from_average = TRUE,

alpha = 0.90,

fair_c = 1.0,

poisson_max_delta_step = 0.70,

tweedie_variance_power = 1.5,

lambdarank_truncation_level = 30,

# Metric Parameters (metric is in Core)

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#metric-parameters

is_provide_training_metric = TRUE,

eval_at = c(1,2,3,4,5),

# Network Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#network-parameters

num_machines = 1,

# GPU Parameters

# https://lightgbm.readthedocs.io/en/latest/Parameters.html#gpu-parameters

gpu_platform_id = -1,

gpu_device_id = -1,

gpu_use_dp = TRUE,

num_gpu = 1)
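The `FeatureColNames` argument in the call above is built by dropping the ID, date, and target columns from the column names of the training data. The sketch below shows the same idiom on a toy data.frame and an equivalent `setdiff()` form. The toy columns are hypothetical stand-ins for `TTrainData`.

```r
# Toy stand-in for TTrainData; column names mirror the ones used above
toy <- data.frame(
  IDcol_1 = 1:3,
  IDcol_2 = 4:6,
  DateTime = as.Date("2024-01-01") + 0:2,
  Adrian = c(0.1, 0.2, 0.3),
  Independent_Variable1 = c(1.5, 2.5, 3.5),
  Weights = c(1, 1, 1))

exclude <- c("IDcol_1", "IDcol_2", "DateTime", "Adrian")

# Idiom used in the call above
features_a <- names(toy)[!names(toy) %in% exclude]

# Equivalent, slightly shorter form
features_b <- setdiff(names(toy), exclude)

print(features_a)
print(identical(features_a, features_b))
```

Note that any column not listed in `exclude` stays in the feature list, including `Weights` in this toy example, so it is worth printing the result once to confirm it contains only the columns you intend to model on.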

# Outcome

ModelID <- "Test_Model_1"

colnames <- data.table::fread(file = file.path(getwd(), paste0(ModelID, "_ColNames.csv")))

Preds <- AutoQuant::AutoLightGBMScoring(

TargetType = "regression",

ScoringData = TTestData,

ReturnShapValues = FALSE,